Fair Play – The Interaction Between Humans and Machines in Recruiting

19.03.2020

For equality of opportunity – making recruiting fairer

In a globally and digitally connected world, issues such as discrimination and fairness play a fundamental role. Inequality and a lack of transparency are not only common in everyday life but also pose formidable challenges in the job market.

Equality of opportunity is no longer just an abstract idea; it is increasingly being enshrined in law. Besides featuring in the United Nations' Sustainable Development Goals, it has found its way into German law through the General Equal Treatment Act (Allgemeines Gleichbehandlungsgesetz, AGG). Among other things, this act stipulates that no one may be disadvantaged because of characteristics such as gender, ethnic origin or religion.

The FAIR project, funded by the state of North Rhine-Westphalia and the European Commission, investigates how automated recruitment processes can help make hiring decisions fairer. To evaluate whether, and to what extent, algorithms improve equality of opportunity, we first need a sound understanding of discrimination, and therefore a concrete definition of the term on which to base the analysis.

Is being "fair" as easy as simply not discriminating?

Fairness is a nebulous concept, which makes an objective standard difficult to define. For example, treating men and women differently in an individual case may be justifiable if doing so adequately counteracts existing discrimination. How, then, can companies make decisions that are legally compliant, efficient and fair on the basis of such a concept?

The FAIR Index measures discriminatory tendencies in application processes, whether the decisions are made by algorithms or by people. It thus makes the status quo more transparent and shows how such patterns develop over time and how they might be improved.

The FAIR Index – making discrimination visible

Companies make thousands of decisions every day, and to survive in the marketplace they need to limit the impact of wrong decisions as much as possible. In this context, the terms “discrimination” and “fairness” are often discussed in terms of quotas. But is a simple indicator such as statistical parity, which calls for equal outcomes across groups, meaningful enough for a construct as complex as fairness? If significantly more men than women apply for a job, statistical parity says little about potential discrimination and can even lead to the data being misinterpreted.

Equal opportunities as a general measurement

The FAIR Index takes into account not only how many candidates from each group were selected but whether those selection decisions were correct, and it reveals tendencies with respect to discrimination-sensitive characteristics such as gender or origin. Because equality of opportunity requires a balance between groups, the key questions are: was anyone hired by mistake, and who may have been wrongly overlooked?

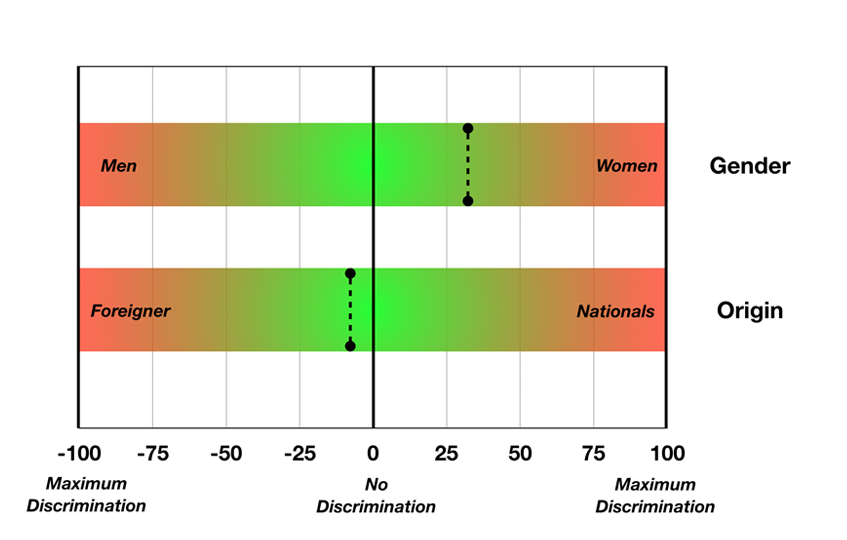

The figure above shows the extent of discrimination on a scale from 0 to 100. A FAIR Index of 0 means that the numbers of "wrongly" hired and "wrongly" rejected applicants are the same across all groups of the criterion in question. Taking gender as an example, as many men as women were "wrongly" turned down, and as many men as women were "wrongly" hired. At the other extreme, a value of 100 indicates complete discrimination against the respective group, whether with regard to gender or origin.
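To make the contrast between statistical parity and an error-based view more concrete, here is a minimal sketch in Python. It is not the FAIR Index formula, which is not given here. The names (`Applicant`, `selection_rate`, `error_rates`, `error_gap_score`), the toy data and the 0 to 100 scaling (the largest gap in error rates between two groups, multiplied by 100) are hypothetical assumptions for illustration. The sketch also assumes that, for every applicant, we know not only whether they were hired but also whether they were actually suitable for the job.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    group: str      # value of the sensitive criterion, e.g. "men" or "women"
    hired: bool     # the decision that was actually made
    suitable: bool  # ASSUMED ground truth: should this person have been hired?


def members(applicants, group):
    """All applicants belonging to one group."""
    return [a for a in applicants if a.group == group]


def selection_rate(applicants, group):
    """Share of a group's applicants who were hired (the basis of statistical parity)."""
    group_members = members(applicants, group)
    return sum(a.hired for a in group_members) / len(group_members)


def error_rates(applicants, group):
    """Return (wrongly hired rate, wrongly rejected rate) for one group.

    Wrongly hired: hired although unsuitable, relative to the group's unsuitable applicants.
    Wrongly rejected: rejected although suitable, relative to the group's suitable applicants.
    Assumes each group contains both suitable and unsuitable applicants.
    """
    group_members = members(applicants, group)
    unsuitable = [a for a in group_members if not a.suitable]
    suitable = [a for a in group_members if a.suitable]
    wrongly_hired = sum(a.hired for a in unsuitable) / len(unsuitable)
    wrongly_rejected = sum(not a.hired for a in suitable) / len(suitable)
    return wrongly_hired, wrongly_rejected


def error_gap_score(applicants, group_a, group_b):
    """HYPOTHETICAL 0-100 score: 0 = equal error rates, 100 = maximal imbalance."""
    hired_a, rejected_a = error_rates(applicants, group_a)
    hired_b, rejected_b = error_rates(applicants, group_b)
    return 100 * max(abs(hired_a - hired_b), abs(rejected_a - rejected_b))


def make(group, hired, suitable, n):
    """Create n identical toy applicants."""
    return [Applicant(group, hired, suitable) for _ in range(n)]


# Toy data: twice as many men as women apply.
applicants = (
    make("men", True, True, 4)         # suitable men, hired
    + make("men", False, False, 4)     # unsuitable men, rejected
    + make("women", True, True, 1)     # suitable woman, hired
    + make("women", False, True, 1)    # suitable woman, wrongly rejected
    + make("women", True, False, 1)    # unsuitable woman, wrongly hired
    + make("women", False, False, 1)   # unsuitable woman, rejected
)

print(selection_rate(applicants, "men"), selection_rate(applicants, "women"))  # 0.5 0.5
print(error_gap_score(applicants, "men", "women"))  # 50.0
```

In this toy example statistical parity is satisfied, since both groups have a selection rate of 0.5 even though twice as many men applied. Yet half of the suitable women were rejected and half of the unsuitable women were hired, while no man was affected by either error. A measure based on wrong decisions exposes this imbalance; statistical parity alone does not. Using rates rather than raw counts is a further assumption of the sketch, chosen so that groups of different sizes can be compared.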

Together for the best result

Whether people make decisions together with machines or leave the decision to machines entirely, certain ethical issues must be considered. A common fear, for instance, is that today's recruiting algorithms perpetuate forms of discrimination inherited from the past. This fear often obscures the many advantages of the technology and the ways in which it can improve our processes.

In the age of digitisation, applying for a job takes less and less time, so recruiters now face hundreds more CVs than they used to. As a result, there is often little time left to assess each applicant's CV individually. Unlike humans, algorithms are not affected by stress or fatigue, nor by the unconscious bias that can creep into human judgement. However, the criteria on which these algorithms operate must be made transparent, and the algorithms must be evaluated continuously, for example using the FAIR Index.

Whether conscious or unconscious, small missteps or overlooked details can become entrenched in company structures and practices and accumulate over time. This is where an algorithm's neutrality can help: as the FAIR project has demonstrated, applicants' CVs can be interpreted fairly within seconds.

The aim of this project is to combine the neutrality of algorithms with human empathy and to make the application process FAIR(ER). When it comes to AI-driven recruitment, we think we’re better together!