Progress thoughts: Virginia Sondergeld

16.10.2022

About the format:

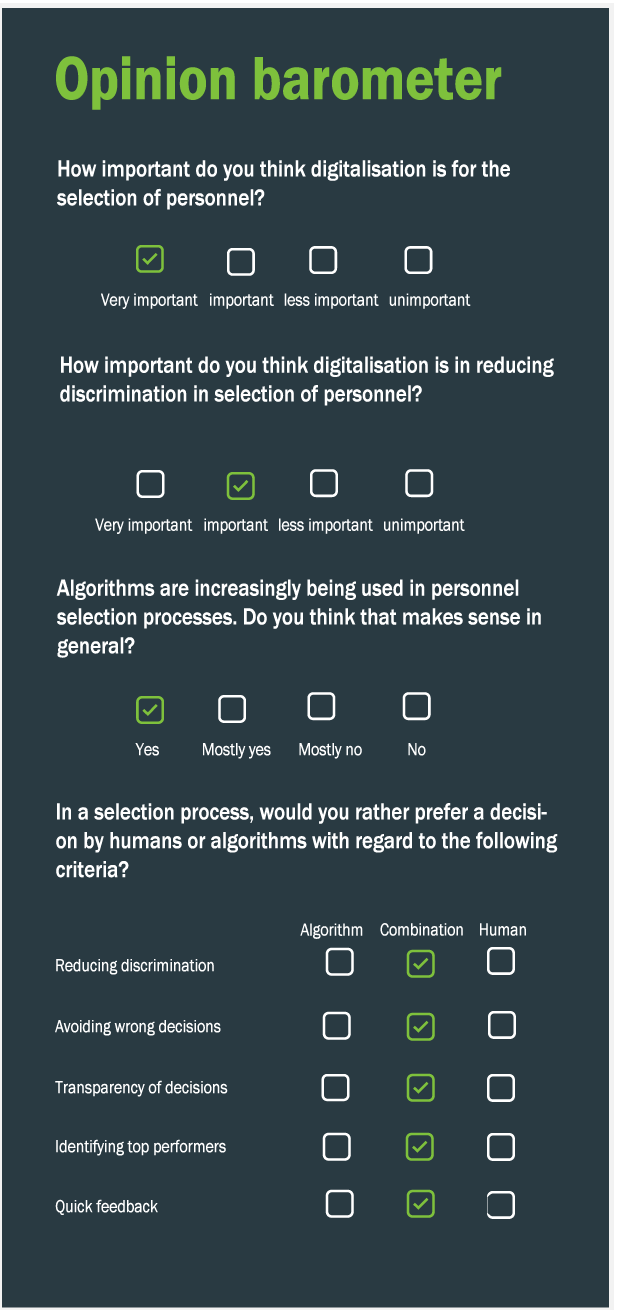

"Fortschrittsgedanken" appeared weekly on the FAIR.nrw blog in 2020. In each installment, experts from research and practice gave their opinions on the same set of recurring questions. The main topic is the use of algorithms in personnel selection.

About the author:

Virginia is a doctoral candidate at DIW Berlin and conducts research on labor markets and gender. She is the chair of The Women in Economics Initiative, which aims to support women economists in their professional development and provide a platform for dialogues on gender equality and diversity in economics. Prior to her PhD, Virginia worked as an Economic Analyst at NERA Economic Consulting. She holds a BSc Economics from Goethe University Frankfurt and an MSc Economics from the London School of Economics.

What opportunities do algorithms offer in recruitment and where are the risks?

What opportunities do algorithms offer in recruitment and where are the risks?

On the supply side, algorithms make the job search more efficient, for example through personalized recommendations or chatbots that answer routine questions. On the demand side, algorithms save resources in the pre-selection of applicants. They can also complement decisions made by humans and prompt people to question those decisions critically. One challenge I see is detecting biases in models that stem from biases in human-created training data. Without correction, these biases will carry over into the algorithm's decisions. The open question is which fairness criteria and measures we want to use to evaluate the algorithm's decisions and to correct them accordingly.

What influence does discrimination have in personnel selection?

Germany has far-reaching legal regulations against discrimination that give potential candidates corresponding legal rights. However, these measures are only as effective as the extent to which those affected actually invoke them. Because of a lack of knowledge or perceived power imbalances, this sometimes does not happen to a sufficient degree. In my view, implicit biases and double standards remain important issues: the same actions or achievements are valued differently depending on the social group a person belongs to. In recruiting, this leads to suboptimal and discriminatory decisions.

Can algorithms help to reduce discrimination in personnel selection?

I see potential for algorithms to support decisions, especially with regard to implicit biases, provided that they are explicitly designed to reduce these biases and that this effect is verified. In addition to the technical implementation, it is essential to educate the responsible employees about the significance of implicit biases. People will only accept and value a machine correcting their perception if they understand the weaknesses of their own assessment and want to address them.

In concrete terms: What does the ideal process for personnel selection look like?

The ideal process starts with a job advertisement that realistically describes the position and the required qualifications and that appeals to applicants with diverse backgrounds. The resulting pool of candidates should be screened for diversity and suitability to identify potential self-selection bias. After the CV-based pre-selection, the selection process combines elements that assess professional competencies, which can be evaluated with the help of algorithms, with personal elements. At this stage, it is important to involve as many employees as possible from different roles in the team and to ensure that each of them assesses the candidates independently.